
iRobot: An Intelligent Crawler for Web Forums

Rui Cai, Jiang-Ming Yang, Wei Lai, Yida Wang, and Lei Zhang

Microsoft Research, Asia

No. 49 Zhichun Road, Beijing, 100080, P.R. China

{ruicai, jmyang, weilai, v-yidwan, leizhang}@microsoft.com

ABSTRACT

In this paper we study the Web forum crawling problem, a fundamental step in many Web applications such as search engines and Web data mining. As a typical form of user-created content (UCC), the Web forum has become an important resource on the Web thanks to the rich information contributed by millions of Internet users every day. However, crawling Web forums is not trivial because of their deep link structures, the large number of duplicate pages, and the many invalid pages caused by login failures. In this paper, we propose and build a prototype of an intelligent forum crawler, iRobot, which understands the content and structure of a forum site and then decides how to choose traversal paths among different kinds of pages. To do this, we first randomly sample (download) a few pages from the target forum site, use the page content layout as the feature to group those pre-sampled pages, and reconstruct the forum's sitemap. After that, we select an optimal crawling path that traverses only informative pages and skips invalid and duplicate ones. Extensive experimental results on several forums show the performance of our system in the following aspects: 1) Effectiveness – compared to a generic crawler, iRobot significantly reduces the number of duplicate and invalid pages; 2) Efficiency – at the small cost of pre-sampling a few pages to learn the necessary knowledge, iRobot saves substantial network bandwidth and storage because it fetches only informative pages from a forum site; and 3) long threads that are divided into multiple pages can be re-concatenated and archived as whole threads, which greatly helps further indexing and data mining.
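The abstract does not say how page layout is represented or compared. As a minimal sketch, assume a page's layout can be approximated by the set of root-to-element tag paths in its HTML, and that pre-sampled pages are grouped by the Jaccard similarity of those sets using greedy leader clustering. The function names, the 0.8 threshold, and the sample pages are all hypothetical; this is an illustration of layout-based grouping, not iRobot's actual clustering method.

```python
from html.parser import HTMLParser


class TagPathCollector(HTMLParser):
    """Records every root-to-element tag path, e.g. 'html/body/table/tr'."""

    # Void elements have no end tag, so they are never pushed on the stack.
    VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
            "link", "meta", "source", "track", "wbr"}

    def __init__(self):
        super().__init__()
        self.stack = []
        self.paths = set()

    def handle_starttag(self, tag, attrs):
        self.paths.add("/".join(self.stack + [tag]))
        if tag not in self.VOID:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop up to and including the matching open tag; ignore stray end tags.
        if tag in self.stack:
            while self.stack.pop() != tag:
                pass


def layout_signature(html):
    """Hypothetical layout feature: the set of tag paths in the page."""
    collector = TagPathCollector()
    collector.feed(html)
    collector.close()
    return frozenset(collector.paths)


def jaccard(a, b):
    """Jaccard similarity of two sets (1.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def group_by_layout(pages, threshold=0.8):
    """Greedy leader clustering: a page joins the first group whose leader's
    signature is at least `threshold` similar; otherwise it starts a new group."""
    groups = []  # list of (leader signature, member URLs)
    for url, html in pages.items():
        sig = layout_signature(html)
        for leader, members in groups:
            if jaccard(sig, leader) >= threshold:
                members.append(url)
                break
        else:
            groups.append((sig, [url]))
    return [members for _, members in groups]


# Hypothetical pre-sampled pages: two thread pages and one list page.
pages = {
    "/thread?t=1": "<html><body><table><tr><td>post</td></tr></table></body></html>",
    "/thread?t=2": "<html><body><table><tr><td>reply</td></tr></table></body></html>",
    "/list?p=1": "<html><body><ul><li><a href='/thread?t=1'>t1</a></li></ul></body></html>",
}
print(group_by_layout(pages))  # [['/thread?t=1', '/thread?t=2'], ['/list?p=1']]
```

The two thread pages share all five tag paths, so they fall into one group. The list page shares only `html` and `html/body` with them (similarity 2/8), so it starts its own group. Each resulting group would correspond to one kind of page in a reconstructed sitemap.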

Categories and Subject Descriptors

H.3.3 [Information Storage and Retrieval]: Information Search and Retrieval – clustering, information filtering.

General Terms

Algorithms, Performance, Experimentation

Keywords

Forum Crawler, Sitemap Construction, Traversal Path Selection.

INTRODUCTION

With the development of Web 2.0, user-created content (UCC) is becoming an important data resource on the Web. As a typical form of UCC, the Web forum (also called a bulletin board or discussion board) is popular almost all over the world as a venue for open discussion. Every day, millions of Internet users create innumerable new posts on every conceivable topic and issue. Forum data is therefore a tremendous collection of human knowledge and is highly valuable for various Web applications. For example, commercial search engines such as Google, Yahoo!, and Baidu have begun to leverage information from forums to improve the quality of search results, especially for Q&A queries like 'how to debug JavaScript'. Some recent research efforts have also tried to mine forum data for useful information such as business intelligence [15] and expertise [27]. Whatever the application, however, the fundamental step is to fetch data pages from the various forum sites distributed across the Internet.

Copyright is held by the International World Wide Web Conference Committee (IW3C2). Distribution of these papers is limited to classroom use, and personal use by others.

WWW 2008, April 21–25, 2008, Beijing, China. ACM 978-1-60558-085-2/08/04.

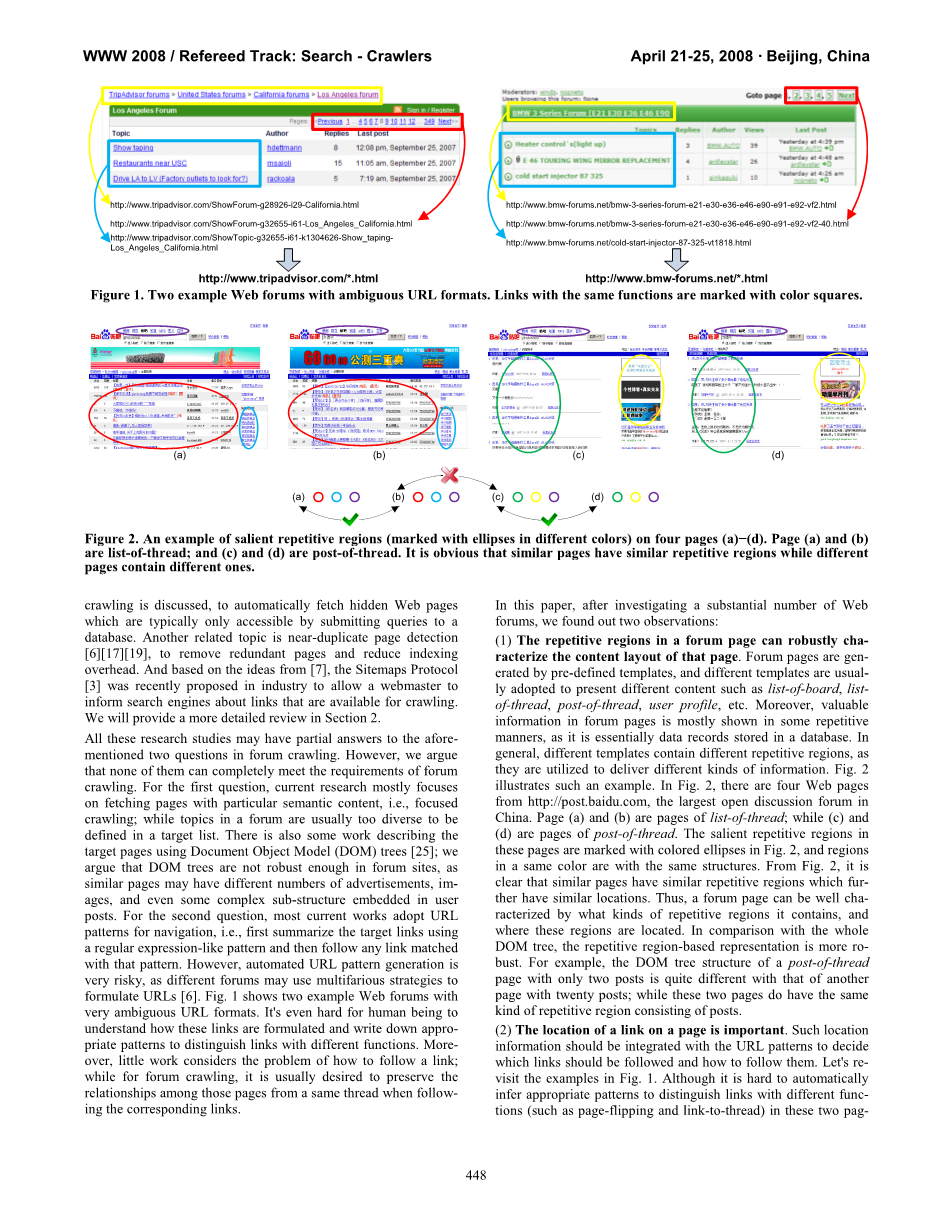

To download forum data effectively and efficiently, we should first understand the characteristics of most forum sites. In general, the content of a forum is stored in a database. When a Web forum service receives a user request, it dynamically generates a response page from pre-defined templates. The whole forum site is usually connected as a very complex graph by many links among various pages. For these reasons, forum sites generally share the following characteristics. First, the service generates duplicate pages (or content) with different Uniform Resource Locators (URLs) [2] for different requests, such as 'view by date' or 'view by title'. Second, a long thread divided into multiple pages usually results in very deep navigation: a user sometimes has to follow tens of links to read the whole thread, and so does a crawler. Finally, for privacy reasons, some content, such as user profiles, is available only to registered users.
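To illustrate the first characteristic, here is a minimal sketch of collapsing duplicates that appear under different URLs. It assumes two pages are duplicates when they contain the same set of text fragments. Sorting the fragments makes 'view by date' and 'view by title' orderings of the same list produce the same fingerprint. The fingerprint scheme, function names, and URLs are hypothetical and are not taken from the paper. The scheme also has clear limits. It misses near-duplicates that differ only in ads or timestamps, and it wrongly merges pages whose item order is meaningful.

```python
import hashlib
import re


def content_fingerprint(html):
    """Order-insensitive fingerprint of a page's text (hypothetical scheme)."""
    # Text between tags, whitespace collapsed and lowercased.
    fragments = (re.sub(r"\s+", " ", f).strip().lower()
                 for f in re.split(r"<[^>]+>", html))
    # Sorting makes 'view by date' and 'view by title' orderings of the
    # same items produce the same key.
    key = "\n".join(sorted(f for f in fragments if f))
    return hashlib.sha1(key.encode("utf-8")).hexdigest()


def dedupe(pages):
    """Keep the first URL seen for each fingerprint.

    pages: iterable of (url, html) pairs.
    Returns (kept URLs, {duplicate URL: kept URL with the same fingerprint}).
    """
    canonical = {}     # fingerprint -> first URL with that fingerprint
    duplicate_of = {}  # later URL -> canonical URL
    for url, html in pages:
        fp = content_fingerprint(html)
        if fp in canonical:
            duplicate_of[url] = canonical[fp]
        else:
            canonical[fp] = url
    return list(canonical.values()), duplicate_of


# Hypothetical pages: the same board listed in two orders, plus another board.
pages = [
    ("/forum?id=3&order=date", "<ul><li>Beta</li><li>Alpha</li></ul>"),
    ("/forum?id=3&order=title", "<ul><li>Alpha</li>\n<li>Beta</li></ul>"),
    ("/forum?id=4", "<ul><li>Gamma</li></ul>"),
]
kept, dups = dedupe(pages)
print(kept)  # ['/forum?id=3&order=date', '/forum?id=4']
print(dups)  # {'/forum?id=3&order=title': '/forum?id=3&order=date'}
```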

Generic Web crawlers [8], which adopt the breadth-first strategy [5], are usually inefficient at crawling Web forums. A Web crawler must make a tradeoff between 'performance and cost', balancing content quality against the costs of bandwidth and storage. A shallow (breadth-first) crawl cannot guarantee access to all valuable content, whereas a deep (depth-first) crawl may fetch too many duplicate and invalid pages (usually caused by login failures). In our experiments we found that with a breadth-first, depth-unlimited crawler, on average more than 40% of the crawled forum pages are invalid or duplicates. Moreover, a generic crawler usually ignores the content relationships among pages and stores each page individually [8], whereas a forum crawler should preserve such relationships to facilitate various data mining tasks. In brief, neither the breadth-first nor the depth-first strategy alone can satisfy the requirements of forum crawling. An ideal forum crawler should answer two questions: 1) what kind of pages should be …
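The tradeoff can be sketched on a toy, in-memory link graph. The hypothetical `bfs_crawl` below is a generic breadth-first crawler with a depth limit and an optional `skip` predicate. In this example the predicate is a hand-labeled set of invalid or duplicate URLs. It stands in for the knowledge a forum crawler would have to learn. The graph, URLs, and labels are invented for illustration and do not reproduce the paper's experiments.

```python
from collections import deque


def bfs_crawl(graph, seed, max_depth, skip=lambda url: False):
    """Breadth-first crawl over an in-memory link graph.

    graph: dict mapping a URL to its out-link URLs (stands in for fetching).
    max_depth: links are not followed from pages at this depth.
    skip: predicate; URLs for which it returns True are never fetched.
    Returns the fetched URLs in breadth-first order.
    """
    fetched = []
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue:
        url, depth = queue.popleft()
        if skip(url):
            continue  # not fetched, so its out-links are not discovered either
        fetched.append(url)
        if depth >= max_depth:
            continue
        for link in graph.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return fetched


# Hypothetical toy forum: index -> board -> threads, plus a login page,
# a login-only profile, and a duplicate 'sorted by date' view of a thread.
graph = {
    "/index": ["/board?id=1", "/login"],
    "/board?id=1": ["/thread?t=5", "/thread?t=5&sort=date", "/profile?u=9"],
    "/thread?t=5": ["/thread?t=5&page=2"],
    "/profile?u=9": ["/login"],
}
# Hand-labeled invalid or duplicate pages (an assumption for this example).
wasted = {"/login", "/profile?u=9", "/thread?t=5&sort=date"}

shallow = bfs_crawl(graph, "/index", max_depth=1)
generic = bfs_crawl(graph, "/index", max_depth=10)
selective = bfs_crawl(graph, "/index", max_depth=10, skip=lambda u: u in wasted)
wasted_ratio = sum(u in wasted for u in generic) / len(generic)

print(shallow)    # ['/index', '/board?id=1', '/login']
print(selective)  # ['/index', '/board?id=1', '/thread?t=5', '/thread?t=5&page=2']
print(len(generic), wasted_ratio)
```

With `max_depth=1` the crawl never reaches the thread pages. The unlimited crawl fetches 7 pages and spends 3 of those fetches on the login page, a login-only profile, and a re-sorted duplicate. The skip predicate keeps only the 4 informative pages.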


