英语原文共 6 页,剩余内容已隐藏,支付完成后下载完整资料

8th International Conference on Information Science and Technology

June 30-July 6, 2018; Granada, Cordoba, and Seville, Spain

Research on Information Collection Method of Shipping Job Hunting Based on Web Crawler

Dongcheng Peng, Tieshan Li*, and Yang Wang

Navigation College

Dalian Maritime University

Dalian, China

Email: pdcfighting@163.com, tieshanli@126.com,

wangyang_youth@163.com

Abstract—In recent years, with the increasing development of Artificial Intelligence, Big Data and Cloud computing, etc., the information on the Internet has been booming, so how to obtain target information efficiently and quickly has become an urgent problem to be solved. This article aims at the data collection and acquisition problem of shipping job hunting information under the network environment. In this study, two kinds of information collection methods for shipping job hunting based on web crawler are proposed. Based on the Python standard libraries and Scrapy crawl framework, corresponding web crawler program is designed and implemented to scrape the target information from target website and store the collected data into local file eventually. Through the amount of data crawled and time consuming comparative analysis, the result demonstrates that the data collection method based on the Scrapy crawler framework is simple to operate, easily extensible, featuring being targeted, with high efficiency and fast speed in collecting shipping job hunting information. Fortunately, the collected data can not only help researchers conduct subsequent data mining analysis, but also can provide data support for the follow-up shipping job hunting information database.

Keywords—web crawler; python standard libraries; scrapy crawl framework

I. INTRODUCTION

With the booming development of network technology like the Internet, Internet of Things, etc., Yu Juan discussed that the information on the network was presented as explosive growth [1].There is no doubt that the information on the Internet covers almost all topics such as social activity, culture, politics, economy, entertainment and so on. Using traditional data collection mechanisms (e.g. questionnaires, interviews) is often limited by funding and geographies. Besides, the objective facts are often biased due to small sample result and low reliability of collected data [2]. Therefore, the web crawler as an effective tool which is applied to collect webpage data comes into being.

Web crawler searches for the webpage through URL (Uniform Resource Locator), and returns the concerned data

C. L. Philip Chen

Department of Computer and Information

Science University of Macau

Macau, China

Email: philipchen2000@gmail.com

to users directly. Therefore, users do not need to access information by browsing webpage, which can save time and energy and improve the accuracy of data collection as well [3].The main goal in scraping is to extract structured data from unstructured or semi-structured web pages in order to conduct subsequent data mining and analysis. In addition to the information service websites of companies related with shipping, there are some specialized shipping service websites that provide recruitment or job hunting information. In this paper, the www.hy163.com website is taken as the target website, which is one of Chinas leading website in crew job hunting and recruitment, specializing in crew recruitment, job hunting, enrollment, training, examination, maritime news and other integrated crew information.

II. WEB CRAWLER

A. Definition

Web Crawler, also known as Web Spider or Web Robot or an ant or automatic indexer [4], is one of the core concepts of 'Internet of Things'[5]. It is essentially a program or script that automatically crawls and downloads web information by certain logic and algorithm rules, which is an important component of search engines [6].

B. Web Crawler Theory

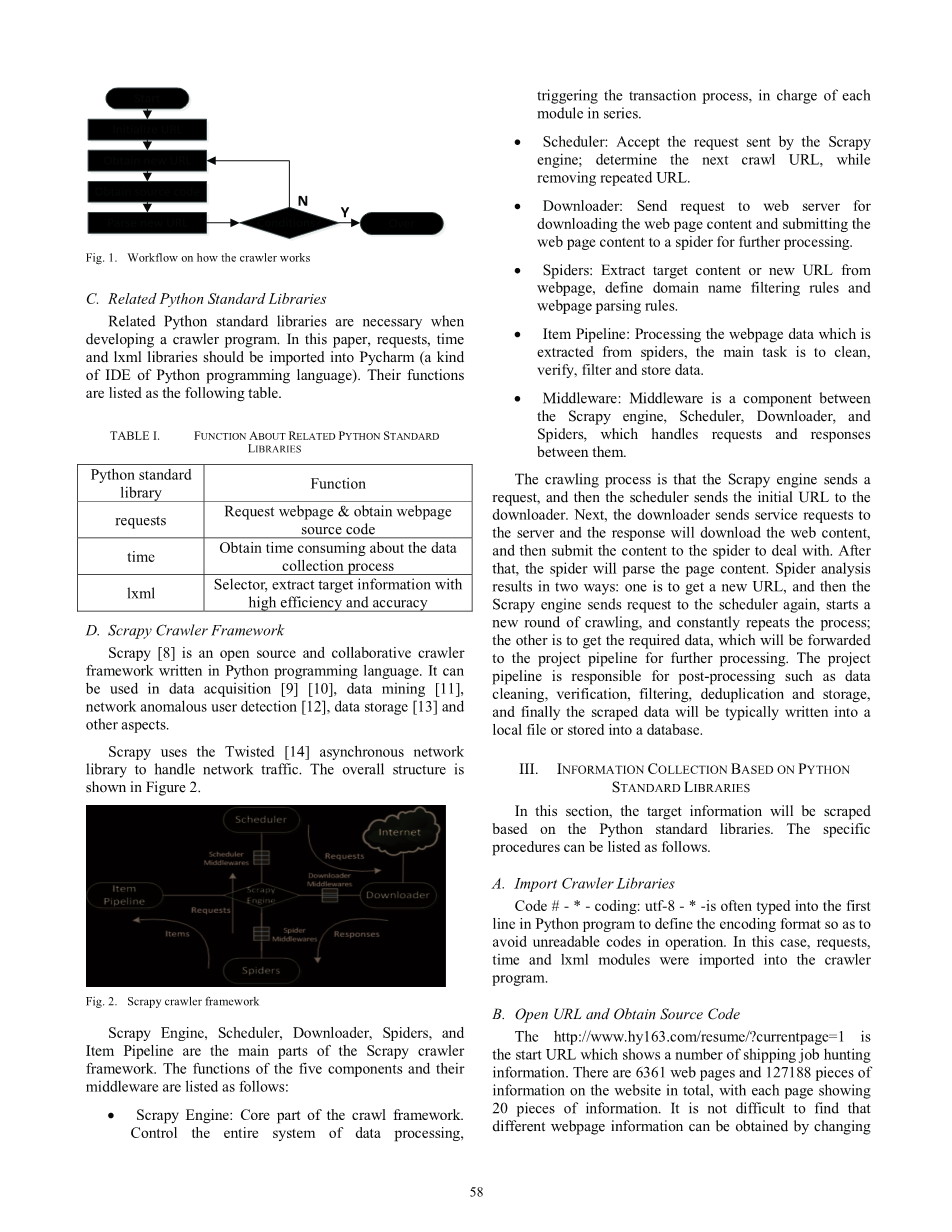

Web crawlers are generally based on pre-set one or several initial webpage URLs. Then follow certain rules for crawling web pages and obtain the list of URLs from the initial webpage. Each time after crawling a web page, the crawler will extract the new URL from web page and place it in the un-crawled queue. Next, URL from the un-crawled queue will be recursively visited and begin a new round of crawling, which repeat the process constantly. The crawler does not end until the URL in the queue has been crawled or other pre-determined conditions been satisfied [7]. Generally, the work flow on how the crawler works is presented by Figure 1.

978-1-5386-3782-1/18/$31.00 copy;2018 IEEE

Start

Initialize URL

Obtain new URL

|

Obtain source code |

||||

|

Parse new URL |

Conditions ? |

|||

|

over |

fs |

Fig. 1. Workflow on how the crawler works

C. Related Python Standard Libraries

Related Python standard libraries are necessary when developing a crawler program. In this paper, requests, time and lxml libraries should be imported into Pycharm (a kind of IDE of Python programming language). Their functions are listed as the following table.

TABLE I. FUNCTION ABOUT RELATED PYTHON STANDARD

LIBRARIES

<td

剩余内容已隐藏,支付完成后下载完整资料</td

资料编号:[254378],资料为PDF文档或Word文档,PDF文档可免费转换为Word

|

Python standard |

Function |

|

以上是毕业论文外文翻译,课题毕业论文、任务书、文献综述、开题报告、程序设计、图纸设计等资料可联系客服协助查找。