Semantic Hashing

INTRODUCTION

One of the most popular and widely used algorithms for retriev- ing documents that are similar to a query document is TF-IDF[15, 14] which measures the similarity between documents by com- paring their word-count vectors. The similarity metric weights each word by both its frequency in the query document (Term Fre- quency) and the logarithm of the reciprocal of its frequency in the whole set of documents (Inverse Document Frequency). TF-IDF has several major drawbacks:

-

- It computes document similarity directly in the word-count space, which can be slow for large vocabularies.

- It assumes that the counts of different words provide inde- pendent evidence of similarity.

- It makes no use of semantic similarities between words so it cannot see the similarity between “Wolfowitz resigns” and “Gonzales quits”.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

Copyright 2007 ACM SIGIR.

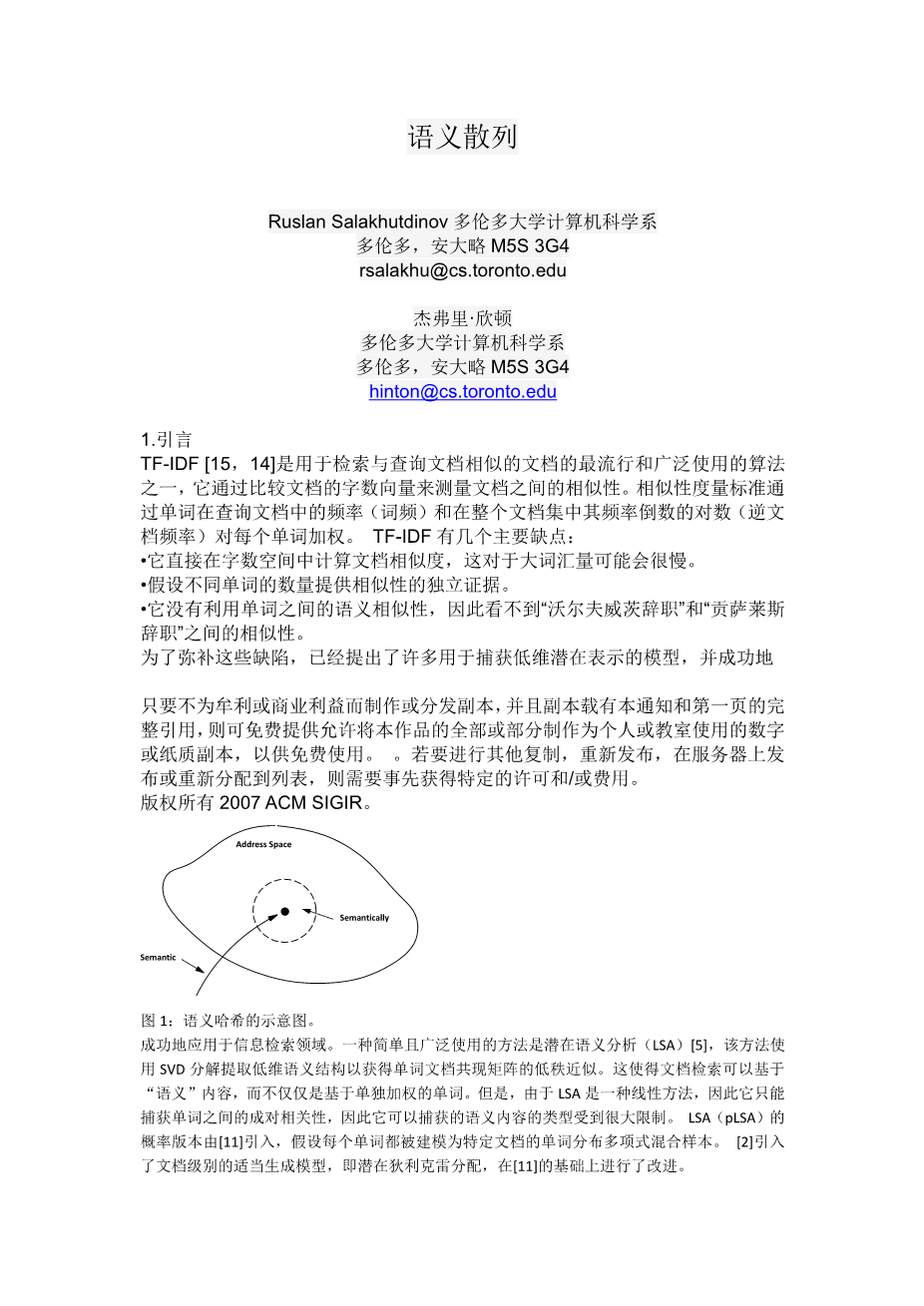

Address Space

Semantically Similar Documents

Semantic Hashing Function

Figure 1: A schematic representation of semantic hashing.

To remedy these drawbacks, numerous models for capturing low- dimensional, latent representations have been proposed and successfully applied in the domain of information retrieval. A simple and widely-used method is Latent Semantic Analysis (LSA) , which extracts low-dimensional semantic structure using SVD de- composition to get a low-rank approximation of the word-document co-occurrence matrix. This allows document retrieval to be based on “semantic” content rather than just on individually weighted words. LSA, however, is very restricted in the types of semantic content it can capture because it is a linear method so it can only capture pairwise correlations between words. A probabilistic ver- sion of LSA (pLSA) was introduced by , using the assumption that each word is modeled as a sample from a document-specific multinomial mixture of word distributions. A proper generative model at the level of documents, Latent Dirichlet Allocation, was introduced by , improving upon .

These recently introduced probabilistic models can be viewed as graphical models in which hidden topic variables have directed connections to variables that represent word-counts. Their major drawback is that exact inference is intractable due to explaining away, so they have to resort to slow or inaccurate approximations to compute the posterior distribution over topics. This makes it diffi- cult to fit the models to data. Also, as Welling et. al. point out, fast inference is important for information retrieval. To achieve this[16] introduce a class of two-layer undirected graphical models that generalize Restricted Boltzmann Machines (RBMrsquo;s) to expo- nential family distributions. This allows them to model non-binary data and to use non-binary hidden (i.e. latent) variables. Maxi- mum likelihood learning is intractable in these models, but learn- ing can be performed efficiently by following an approximation to the gradient of a different objective function called “contrastive di- vergence” . Several further developments of these undirected models show that they are competitive in terms of retrieval accuracy with their directed counterparts.

All of the above models, however, have important limitations.

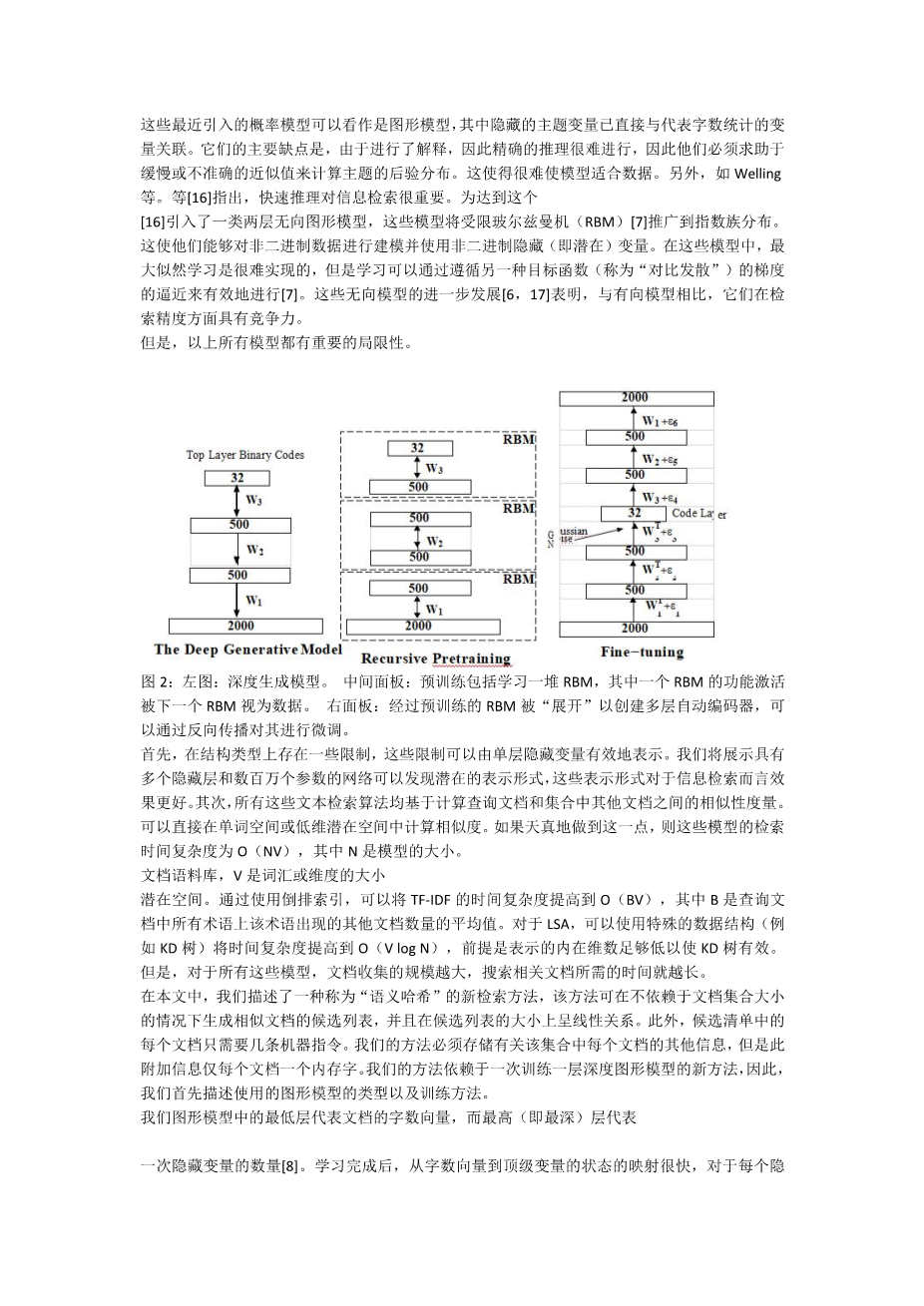

Figure 2: Left panel: The deep generative model. Middle panel: Pretraining consists of learning a stack of RBMrsquo;s in which the feature activations of one RBM are treated as data by the next RBM. Right panel: After pretraining, the RBMrsquo;s are “unrolled” to create a multi-layer autoencoder that is fine-tuned by backpropagation.

First, there are limitations on the types of structure that can be rep- resented efficiently by a single layer of hidden variables. We will show that a network with multiple hidden layers and with millions of parameters can discover latent representations that work much better for information retrieval. Second, all of these text retrieval algorithms are based on computing a similarity measure between a query document and other documents in the collection. The sim- ilarity is computed either directly in the word space or in a low- dimensional latent space. If this is done naively, the retrieval time complexity of these models is O(NV ), where N is the size of the document corpus and V is the size of vocabulary or dimensionality of the latent space. By using an inverted index, the time complexity for TF-IDF can be improved to O(BV ), where B is the average, over all terms in the query document, of the number of other doc- uments in which the term appears. For LSA, the time complexity can be improved to O(V log N ) by using special data structures such as KD-trees, provided the intrinsic dimensionality of the rep- resentations is low enough for KD-trees to be effective. For all of these models, however, the larger the size of document collection, the longer it will take to search for relevant documents.

In this paper we describe a new retrieval method called “seman- tic hashing” that produces a shortlist of similar documents in a time that is independent of the size of the document collection and linear in the size of the shortlist. Moreover, only a few machine instruc- tions are required per document in the shortlist. Our method must store additional information about every document in the collec- tion, but this additional information is onl

剩余内容已隐藏,支付完成后下载完整资料

英语译文共 9 页,剩余内容已隐藏,支付完成后下载完整资料

资料编号:[410046],资料为PDF文档或Word文档,PDF文档可免费转换为Word

以上是毕业论文外文翻译,课题毕业论文、任务书、文献综述、开题报告、程序设计、图纸设计等资料可联系客服协助查找。